Abstract

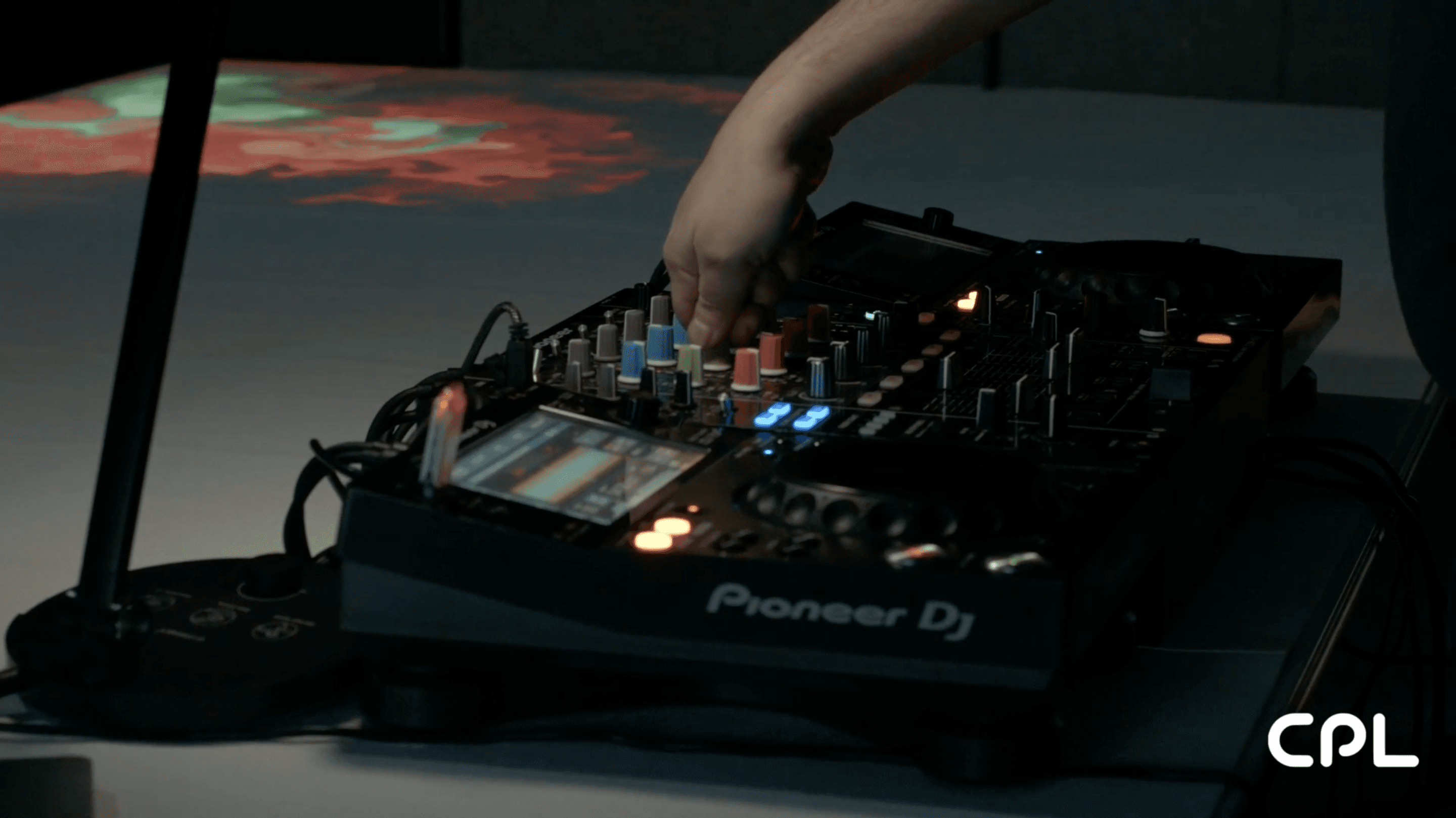

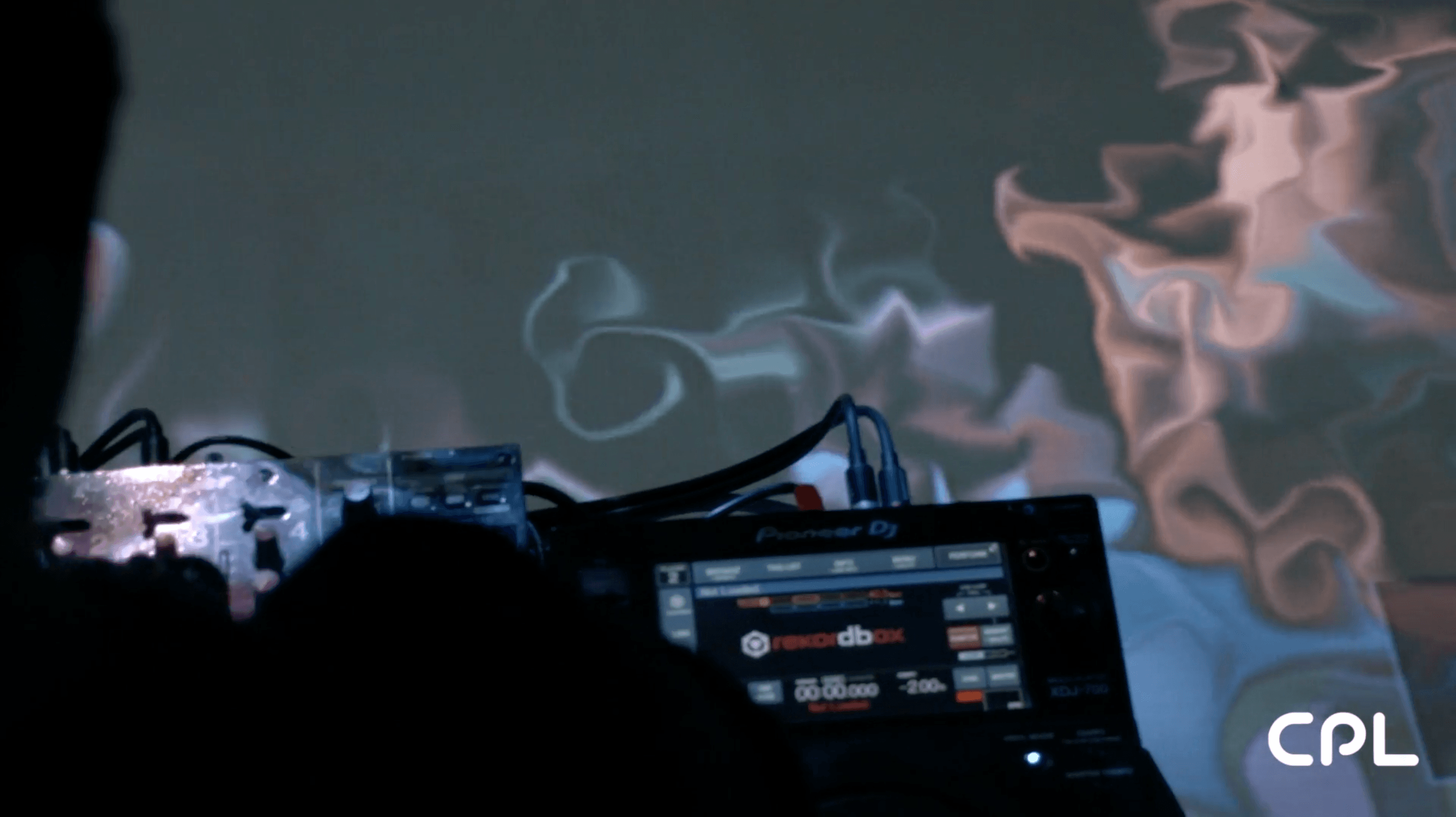

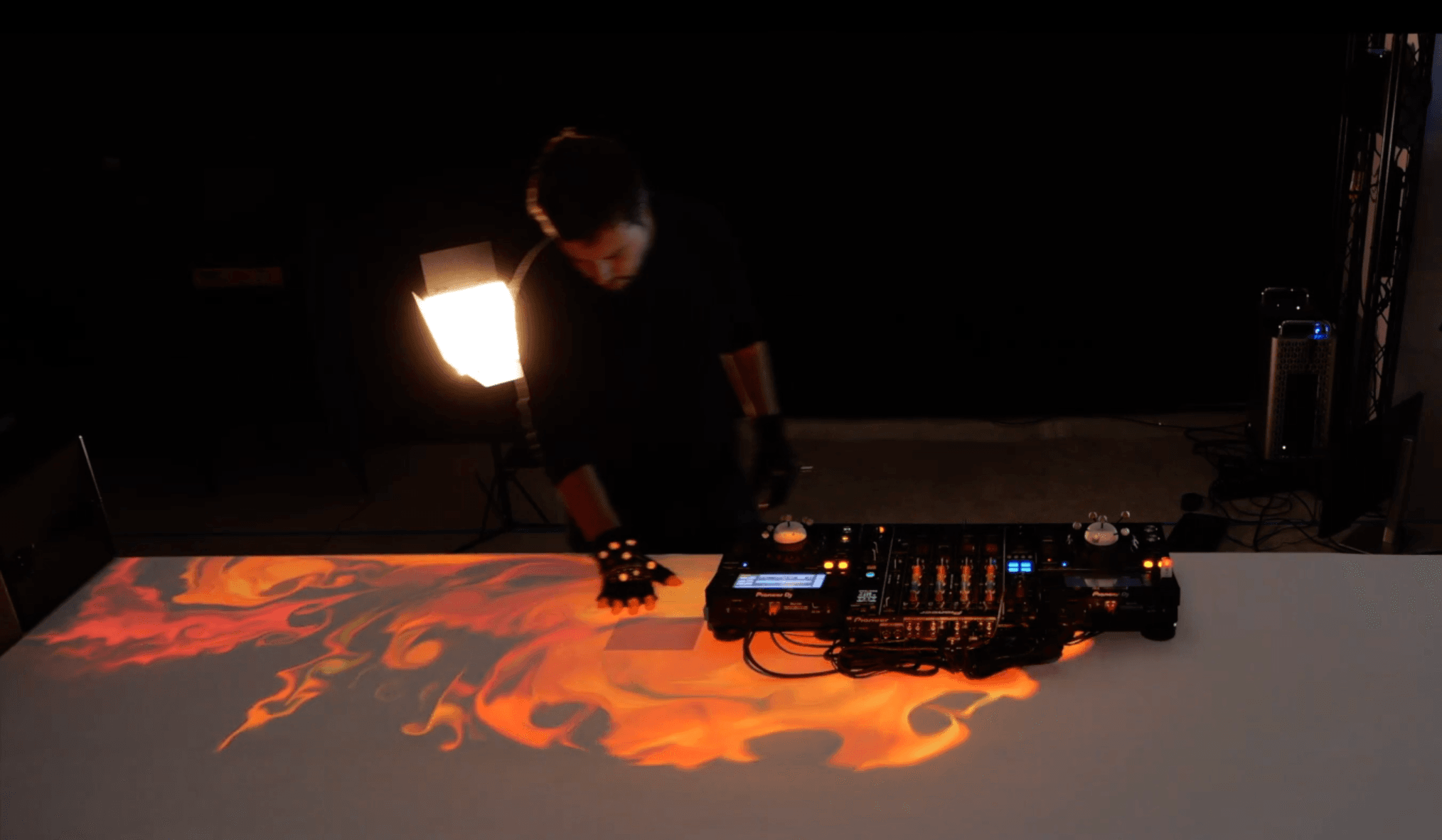

DJESTHESIA is a research project that uses tangible interaction to craft real-time audiovisual multimedia, blending sound, visuals, and gestures into a unified live performance. Combining a DJ setup (e.g., mixer and two decks) with motion capture and projected visuals, DJESTHESIA lets the DJ control the music and the visuals at the same time through the audio mix and gestures, transforming the DJ into both a performer and a performance.

System Architecture

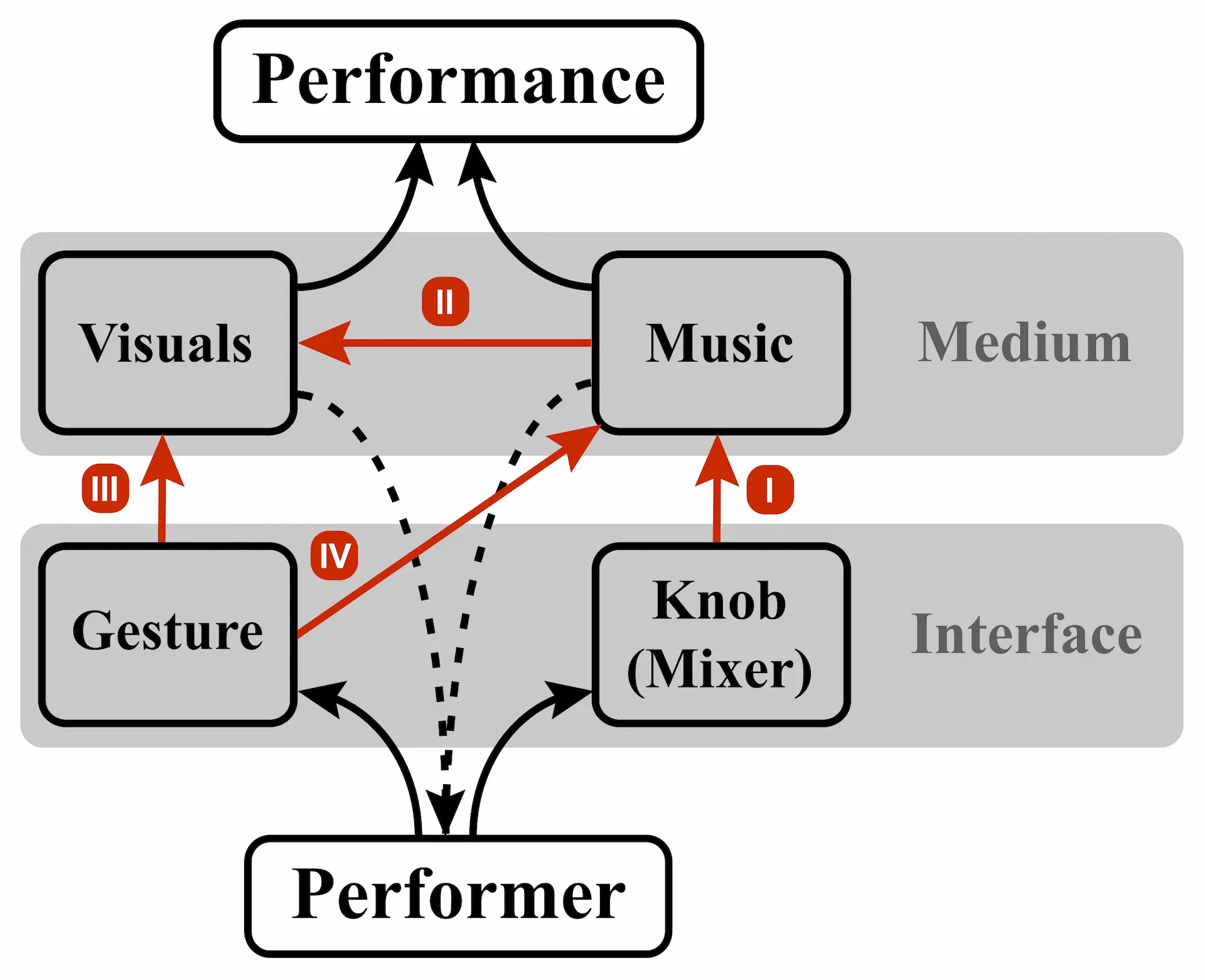

Fig. 1 - System Overview. DJESTHESIA supports four types of interaction between the DJ and the multimedia. I) “Knob” changes music. In this mode, standard DJing is performed. II) Music changes visuals. In this mode, changes to the audio parameters (e.g., EQs) made through the mixer directly affect the colors used to represent the music. III) Gesture changes visuals. In this mode, gestures and body movements let the DJ interact (e.g., physically grab, release, summon, throw) with the visual representation of the music. IV) Gesture changes music. In this mode, gestures (e.g., 3D curves) convey information to audio composition software to alter aspects of the music being played.

The multimedia is composed through four modes, all of which can be active simultaneously:

- Mode I: “Knob” changes music. The first mode is standard in most DJ setups. When a DJ uses a mixer and decks, the DJ changes the music by physically turning knobs, for example to increase the highs or lows or to change parameters such as filters or equalizers.

- Mode II: Music changes visuals. A low-latency audio pipeline routes the sound from the mixer to audio composition software on the user's computer. A custom FFT plugin then extracts real-time frequency data and maps it into RGB color space. The color information is sent to a web-based fluid dynamics simulation, which is projected onto the DJ's workspace.

- Mode III: Gesture changes visuals. A standard MoCap system is used for spatial gesture tracking. The DJ wears gloves with custom MoCap markers to interact with the generated visuals (e.g., physically grab, release, summon, throw).

- Mode IV: Gesture changes music. The same gesture-based interaction is used to craft the music. Using two-handed gestures, a DJ can draw EQ curves that are applied to the music (including curves that would be impossible to achieve on a mixer) and modify other parameters such as volume. This gives DJs a tangible interface to sculpt music in a spatial environment.
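
The frequency-to-color step in Mode II can be illustrated with a minimal sketch. This is not the project's FFT plugin: the band edges, the assignment of lows, mids, and highs to red, green, and blue, the sample rate, and the function names are all assumptions made for illustration. A naive DFT stands in for a real FFT so the example needs only the standard library.

```python
import cmath
import math

SAMPLE_RATE = 44_100  # Hz; assumed, not stated in the project description
# Hypothetical band edges in Hz: lows -> red, mids -> green, highs -> blue.
BANDS = ((20.0, 250.0), (250.0, 4_000.0), (4_000.0, 20_000.0))


def magnitude_spectrum(frame):
    """Naive DFT magnitudes for bins 0..N/2 (a real plugin would use an FFT)."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(frame)))
        for k in range(n // 2 + 1)
    ]


def frame_to_rgb(frame, sample_rate=SAMPLE_RATE):
    """Map the energy in three frequency bands to an (r, g, b) tuple in 0..255."""
    mags = magnitude_spectrum(frame)
    bin_hz = sample_rate / len(frame)  # frequency spacing between DFT bins
    energies = [
        sum(m * m for k, m in enumerate(mags) if lo <= k * bin_hz < hi)
        for lo, hi in BANDS
    ]
    peak = max(energies)
    if peak == 0.0:
        return (0, 0, 0)  # silence maps to black
    # Normalise to the strongest band so the dominant band is fully saturated.
    return tuple(round(255 * e / peak) for e in energies)
```

Normalising each frame to its strongest band keeps the color independent of overall loudness. A mapping that also reflects volume would scale by absolute energy instead.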

Workflow (Visuals)

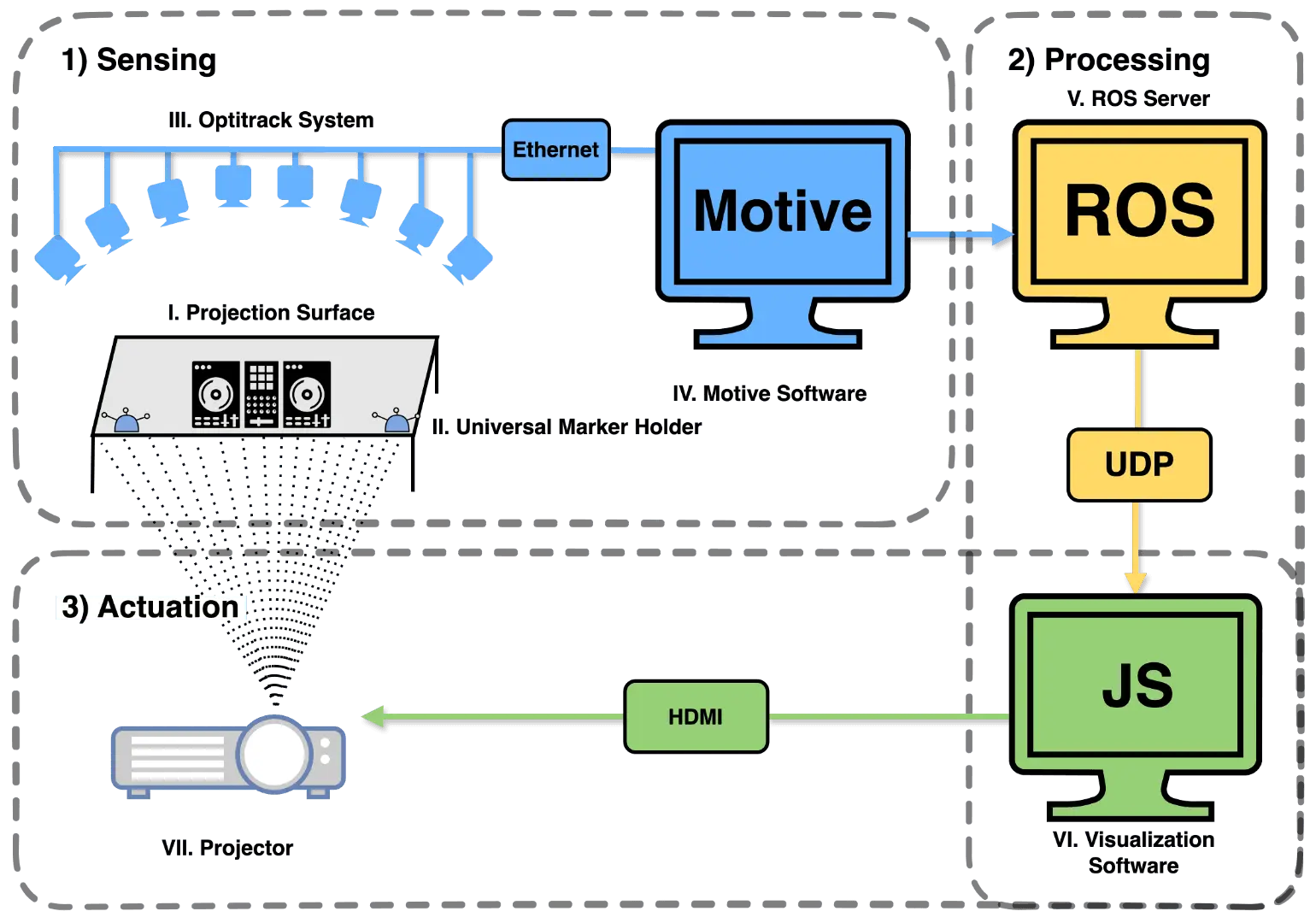

Fig. 2 - Visual pipeline.

Fig. 2 shows the visual information workflow in DJESTHESIA.

1. Sensing: The system uses a motion capture camera system (OptiTrack) to track the position and orientation of the Universal Marker Holders (UMHs). UMHs are normally placed inside a glove to track the DJ's hands and gestures. However, they can also be placed on top of the mixer or the decks to capture the interactions between the DJ and the equipment. This information is sent to tracking software (Motive 3D) for processing.

2. Processing: A server running the Robot Operating System (ROS) acts as an intermediary between the physical and digital information: it processes the motion capture data and sends it to the visualization engine via UDP.

3. Actuation: The visualization engine is a WebGL fluid simulation written in JavaScript (JS). It receives the position and orientation coordinates of the UMHs and maps them to mouse pointer positions. The pointer also receives a trigger signal from the audio analyzer: if the signal passes the threshold, the pointer triggers a click; otherwise, it simply follows the UMH coordinates. Finally, the result is projected onto the table where the DJ is performing.
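
The actuation logic described above (follow the UMH, click when the audio trigger passes a threshold) can be sketched as follows. The actual engine is written in JavaScript; this Python sketch only illustrates the decision logic. The table bounds, canvas size, threshold value, and all names are hypothetical, and only the planar position of the UMH is used here, with orientation ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PointerEvent:
    x: int       # pixel column in the projected canvas
    y: int       # pixel row in the projected canvas
    click: bool  # True when the audio trigger passes the threshold


def to_pixel(value, lo, hi, size):
    """Linearly map value from [lo, hi] to a pixel index in [0, size - 1], clamped."""
    t = (value - lo) / (hi - lo)
    t = min(max(t, 0.0), 1.0)  # keep the pointer inside the canvas
    return round(t * (size - 1))


def pointer_event(umh_xy, audio_level, *, table_bounds, canvas_size, threshold):
    """Turn one UMH position and one audio trigger level into a pointer event.

    umh_xy       -- (x, y) position of the UMH in tracking coordinates
    table_bounds -- ((x_min, x_max), (y_min, y_max)) of the projection surface
    canvas_size  -- (width, height) of the projected canvas in pixels
    threshold    -- audio level above which the pointer clicks (assumed scale)
    """
    (x_min, x_max), (y_min, y_max) = table_bounds
    width, height = canvas_size
    x = to_pixel(umh_xy[0], x_min, x_max, width)
    y = to_pixel(umh_xy[1], y_min, y_max, height)
    # Below the threshold the pointer only follows the hand; above it, it clicks.
    return PointerEvent(x=x, y=y, click=audio_level > threshold)
```

For example, with `table_bounds=((0.0, 1.0), (0.0, 0.5))` and `canvas_size=(1920, 1080)`, a UMH at the far corner of the table maps to pixel `(1919, 1079)`, and positions outside the table are clamped to the canvas edge.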

DJESTHESIA can be extended to a wide range of use cases, from novel art installations to musical performances.

Research Line

Tangible User Interfaces

Tangible User Interfaces (TUIs) for human-computer interaction (HCI) provide the user with physical representations of digital information, with the aim of overcoming the limitations of screen-based interfaces. Although many compelling demonstrations of TUIs exist, there is still a lack of research on how TUIs can be implemented in real-life scenarios. …

Team Members

Eduardo Castelló Ferrer

Assistant Professor - School of Science & Technology

Saeka Ono

Research Assistant